def build(

inputs: Union[schedule.Schedule, PrimFunc, IRModule, Mapping[str, IRModule]],

args: Optional[List[Union[Buffer, tensor.Tensor, Var]]] = None,

target: Optional[Union[str, Target]] = None,

target_host: Optional[Union[str, Target]] = None,

name: Optional[str] = "default_function",

binds: Optional[Mapping[tensor.Tensor, Buffer]] = None,

):

"""Build a function with arguments as signature. Code will be generated

for devices coupled with target information.

Parameters

----------

inputs : Union[tvm.te.schedule.Schedule, tvm.tir.PrimFunc, IRModule, Mapping[str, IRModule]]

The input to be built

args : Optional[List[Union[tvm.tir.Buffer, tensor.Tensor, Var]]]

The argument lists to the function.

target : Optional[Union[str, Target]]

The target and option of the compilation.

target_host : Optional[Union[str, Target]]

Host compilation target, if target is device.

When TVM compiles device specific program such as CUDA,

we also need host(CPU) side code to interact with the driver

setup the dimensions and parameters correctly.

target_host is used to specify the host side codegen target.

By default, llvm is used if it is enabled,

otherwise a stackvm intepreter is used.

name : Optional[str]

The name of result function.

binds : Optional[Mapping[tensor.Tensor, tvm.tir.Buffer]]

Dictionary that maps the binding of symbolic buffer to Tensor.

By default, a new buffer is created for each tensor in the argument.

Returns

-------

ret : tvm.module

A module that combines both host and device code.

Examples

________

There are two typical example uses of this function depending on the type

of the argument `inputs`:

1. it is an IRModule.

.. code-block:: python

n = 2

A = te.placeholder((n,), name='A')

B = te.placeholder((n,), name='B')

C = te.compute(A.shape, lambda *i: A(*i) + B(*i), name='C')

s = tvm.te.create_schedule(C.op)

m = tvm.lower(s, [A, B, C], name="test_add")

rt_mod = tvm.build(m, target="llvm")

2. it is a dict of compilation target to IRModule.

.. code-block:: python

n = 2

A = te.placeholder((n,), name='A')

B = te.placeholder((n,), name='B')

C = te.compute(A.shape, lambda *i: A(*i) + B(*i), name='C')

s1 = tvm.te.create_schedule(C.op)

with tvm.target.cuda() as cuda_tgt:

s2 = topi.cuda.schedule_injective(cuda_tgt, [C])

m1 = tvm.lower(s1, [A, B, C], name="test_add1")

m2 = tvm.lower(s2, [A, B, C], name="test_add2")

rt_mod = tvm.build({"llvm": m1, "cuda": m2}, target_host="llvm")

Note

----

See the note on :any:`tvm.target` on target string format.

"""

if isinstance(inputs, schedule.Schedule):

if args is None:

raise ValueError("args must be given for build from schedule")

input_mod = lower(inputs, args, name=name, binds=binds)

elif isinstance(inputs, (list, tuple, container.Array)):

merged_mod = tvm.IRModule({})

for x in inputs:

merged_mod.update(lower(x))

input_mod = merged_mod

elif isinstance(inputs, (tvm.IRModule, PrimFunc)):

input_mod = lower(inputs)

elif not isinstance(inputs, (dict, container.Map)):

raise ValueError(

f"Inputs must be Schedule, IRModule or dict of target to IRModule, "

f"but got {type(inputs)}."

)

if not isinstance(inputs, (dict, container.Map)):

target = Target.current() if target is None else target

target = target if target else "llvm"

target_input_mod = {target: input_mod}

else:

target_input_mod = inputs

for tar, mod in target_input_mod.items():

if not isinstance(tar, (str, Target)):

raise ValueError("The key of inputs must be str or " "Target when inputs is dict.")

if not isinstance(mod, tvm.IRModule):

raise ValueError("inputs must be Schedule, IRModule," "or dict of str to IRModule.")

target_input_mod, target_host = Target.check_and_update_host_consist(

target_input_mod, target_host

)

if not target_host:

for tar, mod in target_input_mod.items():

tar = Target(tar)

device_type = ndarray.device(tar.kind.name, 0).device_type

if device_type == ndarray.cpu(0).device_type:

target_host = tar

break

if not target_host:

target_host = "llvm" if tvm.runtime.enabled("llvm") else "stackvm"

target_input_mod, target_host = Target.check_and_update_host_consist(

target_input_mod, target_host

)

mod_host_all = tvm.IRModule({})

device_modules = []

for tar, input_mod in target_input_mod.items():

mod_host, mdev = _build_for_device(input_mod, tar, target_host)

mod_host_all.update(mod_host)

device_modules.append(mdev)

# Generate a unified host module.

rt_mod_host = codegen.build_module(mod_host_all, target_host)

# Import all modules.

for mdev in device_modules:

if mdev:

rt_mod_host.import_module(mdev)

if not isinstance(target_host, Target):

target_host = Target(target_host)

if (

target_host.attrs.get("runtime", tvm.runtime.String("c++")) == "c"

and target_host.attrs.get("system-lib", 0) == 1

):

if target_host.kind.name == "c":

create_csource_crt_metadata_module = tvm._ffi.get_global_func(

"runtime.CreateCSourceCrtMetadataModule"

)

to_return = create_csource_crt_metadata_module([rt_mod_host], target_host)

elif target_host.kind.name == "llvm":

create_llvm_crt_metadata_module = tvm._ffi.get_global_func(

"runtime.CreateLLVMCrtMetadataModule"

)

to_return = create_llvm_crt_metadata_module([rt_mod_host], target_host)

else:

to_return = rt_mod_host

return OperatorModule.from_module(to_return, ir_module_by_target=target_input_mod, name=name)wasm_memory_init_with_pool(void *mem, unsigned int bytes)

{

mem_allocator_t _allocator = mem_allocator_create(mem, bytes);

if (_allocator) {

memory_mode = MEMORY_MODE_POOL;

pool_allocator = _allocator;

global_pool_size = bytes;

return true;

}

LOG_ERROR("Init memory with pool (%p, %u) failed.\n", mem, bytes);

return false;

}mem_allocator_t mem_allocator_create(void *mem, uint32_t size)

{

return gc_init_with_pool((char *) mem, size);

}gc_init_with_pool

The previous function invokes this one:

gc_handle_t

gc_init_with_pool(char *buf, gc_size_t buf_size)

{

char *buf_end = buf + buf_size;

char *buf_aligned = (char*)(((uintptr_t) buf + 7) & (uintptr_t)~7);

char *base_addr = buf_aligned + sizeof(gc_heap_t);

gc_heap_t *heap = (gc_heap_t*)buf_aligned;

gc_size_t heap_max_size;

if (buf_size < APP_HEAP_SIZE_MIN) {

os_printf("[GC_ERROR]heap init buf size (%u) < %u\n",

buf_size, APP_HEAP_SIZE_MIN);

return NULL;

}

base_addr = (char*) (((uintptr_t) base_addr + 7) & (uintptr_t)~7) + GC_HEAD_PADDING;

heap_max_size = (uint32)(buf_end - base_addr) & (uint32)~7;

return gc_init_internal(heap, base_addr, heap_max_size);

}and invokes the internal implementation:

static gc_handle_t

gc_init_internal(gc_heap_t *heap, char *base_addr, gc_size_t heap_max_size)

{

hmu_tree_node_t *root = NULL, *q = NULL;

int ret;

memset(heap, 0, sizeof *heap);

memset(base_addr, 0, heap_max_size);

ret = os_mutex_init(&heap->lock);

if (ret != BHT_OK) {

os_printf("[GC_ERROR]failed to init lock\n");

return NULL;

}

/* init all data structures*/

heap->current_size = heap_max_size;

heap->base_addr = (gc_uint8*)base_addr;

heap->heap_id = (gc_handle_t)heap;

heap->total_free_size = heap->current_size;

heap->highmark_size = 0;

root = &heap->kfc_tree_root;

memset(root, 0, sizeof *root);

root->size = sizeof *root;

hmu_set_ut(&root->hmu_header, HMU_FC);

hmu_set_size(&root->hmu_header, sizeof *root);

q = (hmu_tree_node_t *) heap->base_addr;

memset(q, 0, sizeof *q);

hmu_set_ut(&q->hmu_header, HMU_FC);

hmu_set_size(&q->hmu_header, heap->current_size);

hmu_mark_pinuse(&q->hmu_header);

root->right = q;

q->parent = root;

q->size = heap->current_size;

bh_assert(root->size <= HMU_FC_NORMAL_MAX_SIZE);

#if WASM_ENABLE_MEMORY_TRACING != 0

os_printf("Heap created, total size: %u\n", buf_size);

os_printf(" heap struct size: %u\n", sizeof(gc_heap_t));

os_printf(" actual heap size: %u\n", heap_max_size);

os_printf(" padding bytes: %u\n",

buf_size - sizeof(gc_heap_t) - heap_max_size);

#endif

return heap;

}PLDI'16

Hardware support for isolated execution (such as Intel SGX) enables development of applications that keep their code and data confidential even while running in a hostile or compromised host. However, automatically verifying that such applications satisfy confidentiality remains challenging. We present a methodology for designing such applications in a way that enables certifying their confidentiality. Our methodology consists of forcing the application to communicate with the external world through a narrow interface, compiling it with runtime checks that aid verification, and linking it with a small runtime that implements the narrow interface. The runtime includes services such as secure communication channels and memory management. We formalize this restriction on the application as Information Release Confinement (IRC), and we show that it allows us to decompose the task of proving confidentiality into (a) one-time, human-assisted functional verification of the runtime to ensure that it does not leak secrets, (b) automatic verification of the application's machine code to ensure that it satisfies IRC and does not directly read or corrupt the runtime's internal state. We present /CONFIDENTIAL: a verifier for IRC that is modular, automatic, and keeps our compiler out of the trusted computing base. Our evaluation suggests that the methodology scales to real-world applications.

Purpose

- Protect IRC: to prevent info leakage

- WCFI-RW: weak form of control-flow integrity, and along with restrictions on reads and writes

- Formally verify a set of checks to enforce WCFI-RW in SIRs

- Not to depend on a specific compiler, which is not in the TCB

Threat model

- Application U (in SIR) is not malicious but may contain bugs

- U may also use third-party libraries

- The system other than SIR is hostile/compromised

Design

- U and libraries(L) are verified separately

- U can only use L to

sendandrecvdata outside of SIR

Method

- Establish a formal model of U's syntax

- Define what an adversary can do under this threat model, formally

- Define the target (confidentiality)

- Deduce the properties required for WCFI-RW, both for applications and libraries

- Derive and instrument checks in code

- Verify the properties still hold.

Notice that

- These checks derived from the properties ensures WCFI-RW

- The verification may scale

Insights

- The library may also compromise the app

- No check of jump into the middle of instrumentation(s)

- Assumes the page permissions can be changed

- Only needs SP is in U???

Abstract

Recent proposals for trusted hardware platforms, such as Intel SGX and the MIT Sanctum processor, offer compelling security features but lack formal guarantees. We introduce a verification methodology based on a trusted abstract platform (TAP), a formalization of idealized enclave platforms along with a parameterized adversary. We also formalize the notion of secure remote execution and present machine-checked proofs showing that the TAP satisfies the three key security properties that entail secure remote execution: integrity, confidentiality and secure measurement. We then present machine-checked proofs showing that SGX and Sanctum are refinements of the TAP under certain parameterizations of the adversary, demonstrating that these systems implement secure enclaves for the stated adversary models.

Methodology

The enclave is formally modeled, including the program, state (e.g., memory, cache, registers), I/O, execution, and adversaries. The adversaries with privilege, cache access, and memory access are modeled differently.

A trusted abstract platform (TAP) is built for modeling secure remote execution (SRE), which is defined formally and can be meet by satisfying three properties at the same time: Secure Measurement, Integrity, Confidentiality.

Then, to prove that Sanctum and SGX satisfies SRE, the authors prove these architectures refine TAP in Boogie, and therefore meets SRE.

Strength

- Side-channel and Side-channel attackers are modeled

- TAP is a general model for various TEEs

- Formal proof

Weakness

- The refinement is not accurate enough, i.e., remote attestation seems missing

- Source code?

Abstract

In this paper we present a novel solution that combines the capabilities of Large Language Models (LLMs) with Formal Verification strategies to verify and automatically repair software vulnerabilities. Initially, we employ Bounded Model Checking (BMC) to locate the software vulnerability and derive a counterexample. The counterexample provides evidence that the system behaves incorrectly or contains a vulnerability. The counterexample that has been detected, along with the source code, are provided to the LLM engine. Our approach involves establishing a specialized prompt language for conducting code debugging and generation to understand the vulnerability's root cause and repair the code. Finally, we use BMC to verify the corrected version of the code generated by the LLM. As a proof of concept, we create ESBMC-AI based on the Efficient SMT-based Context-Bounded Model Checker (ESBMC) and a pre-trained Transformer model, specifically gpt-3.5-turbo, to detect and fix errors in C programs. Our experimentation involved generating a dataset comprising 1000 C code samples, each consisting of 20 to 50 lines of code. Notably, our proposed method achieved an impressive success rate of up to 80% in repairing vulnerable code encompassing buffer overflow and pointer dereference failures. We assert that this automated approach can effectively incorporate into the software development lifecycle's continuous integration and deployment (CI/CD) process.

Summarize

This paper interface LLM with BMC (Bounded model checking) to conform bugs in C code. BMC (based on SMT solver) improves the arithmetic performance, which is not good in LLM. However, I tested the cases which said to fail in LLM on GPT 4 (Ver Aug. 3) whereas it could find the vulnerability, and this is inconsistent with the paper.

The evaluation uses 1000 cases generated by LLM. It's still unclear if such methodology is applicable to very large code base/functions or on real world code base.

EuroSys'16 Best Paper

This paper supports the author's following work, graphene(-SGX).

Targeted Interfaces (APIs)

- System Calls

- Proc Filesystem

Method

- Measured the download and dependency data of packages in Ubuntu 15.04.

- Statically analyzed what syscalls will be invoked in a package and what path will be accessed by a binary (hardcoded string)

Measurement

- API Importance: A Metric for Individual APIs

- Weighted Completeness: A System-Wide Metric (combined with #downloads)

Questions Answered

- Which syscalls are more important than others?

- Which syscalls can be deprecated? (some are rarely used)

- Which syscalls should be supported for compatibility?

Abstract

Hardware-assisted memory encryption offers strong confidentiality guarantees for trusted execution environments like Intel SGX and AMD SEV. However, a recent study by Li et al. presented at USENIX Security 2021 has demonstrated the CipherLeaks attack, which monitors ciphertext changes in the special VMSA page. By leaking register values saved by the VM during context switches, they broke state-of-the-art constant-time cryptographic implementations, including RSA and ECDSA in the OpenSSL. In this paper, we perform a comprehensive study on the ciphertext side channels. Our work suggests that while the CipherLeaks attack targets only the VMSA page, a generic ciphertext side-channel attack may exploit the ciphertext leakage from any memory pages, including those for kernel data structures, stacks and heaps. As such, AMD’s existing countermeasures to the CipherLeaks attack, a firmware patch that introduces randomness into the ciphertext of the VMSA page, is clearly insufficient. The root cause of the leakage in AMD SEV’s memory encryption—the use of a stateless yet unauthenticated encryption mode and the unrestricted read accesses to the ciphertext of the encrypted memory—remains unfixed. Given the challenges faced by AMD to eradicate the vulnerability from the hardware design, we propose a set of software countermeasures to the ciphertext side channels, including patches to the OS kernel and cryptographic libraries. We are working closely with AMD to merge these changes into affected open-source projects.

Background

- CipherLeak: ciphertext can be accessed by the hypervisor

- In SEV, XEX encryption mode is applied => for a fixed address, same plaintext yields same ciphertext

- Controlled side-channel: NPT (nested page table) present bit clear => PF

- Before SEV-ES, the registers are saved without encryption

- SNP: hypervisor cannot modify or remap guest VM pages (integrity protection)

Attack

- Nginx SSL key generation -> 384bit ECDSA key recovery

This exploits constant time swap algorithm. A decision bit encryption pattern is observed, and therefore the nonce could be derived by observing the mask in 384 iterations.

This paper analyzes the vulnerability space arising in Trusted Execution Environments (TEEs) when interfacing a trusted enclave application with untrusted, potentially malicious code. Considerable research and industry effort has gone into developing TEE runtime libraries with the purpose of transparently shielding enclave application code from an adversarial environment. However, our analysis reveals that shielding requirements are generally not well-understood in real-world TEE runtime implementations. We expose several sanitization vulnerabilities at the level of the Application Binary Interface (ABI) and the Application Programming Interface (API) that can lead to exploitable memory safety and side-channel vulnerabilities in the compiled enclave. Mitigation of these vulnerabilities is not as simple as ensuring that pointers are outside enclave memory. In fact, we demonstrate that state-of-the-art mitigation techniques such as Intel's edger8r, Microsoft's "deep copy marshalling", or even memory-safe languages like Rust fail to fully eliminate this attack surface. Our analysis reveals 35 enclave interface sanitization vulnerabilities in 8 major open-source shielding frameworks for Intel SGX, RISC-V, and Sancus TEEs. We practically exploit these vulnerabilities in several attack scenarios to leak secret keys from the enclave or enable remote code reuse. We have responsibly disclosed our findings, leading to 5 designated CVE records and numerous security patches in the vulnerable open-source projects, including the Intel SGX-SDK, Microsoft Open Enclave, Google Asylo, and the Rust compiler.

ABI vulnerabilities

- Entry status flags sanitization

- Entry stack pointer restore

- Exit register leakage

API vulnerabilities

- Missing pointer range check

- Null-terminated string handling

- Integer overflow in range check

- Incorrect pointer range check

- Double fetch untrusted pointer

- Ocall return value not checked

- Uninitialized padding leakage

Targets

- SGX-SDK

- OpenEnclave

- Graphene

- SGX-LKL

- Rust-EDP

- Asylo

- Keystone

- Sancus

that legacy applications may also make implicit assumptions on the validity of argv and envp pointers, which are not the result of system calls.

WebAssembly in SGX

Remote computation has numerous use cases such as cloud computing, client-side web applications or volunteer computing. Typically, these computations are executed inside a sandboxed environment for two reasons: first, to isolate the execution in order to protect the host environment from unauthorised access, and second to control and restrict resource usage. Often, there is mutual distrust between entities providing the code and the ones executing it, owing to concerns over three potential problems: (i) loss of control over code and data by the providing entity, (ii) uncertainty of the integrity of the execution environment for customers, and (iii) a missing mutually trusted accounting of resource usage.

In this paper we present AccTEE, a two-way sandbox that offers remote computation with resource accounting trusted by consumers and providers. AccTEE leverages two recent technologies: hardware-protected trusted execution environments, and Web-Assembly, a novel platform independent byte-code format. We show how AccTEE uses automated code instrumentation for fine-grained resource accounting while maintaining confidentiality and integrity of code and data. Our evaluation of AccTEE in three scenarios – volunteer computing, serverless computing, and pay-by-computation for the web – shows a maximum accounting overhead of 10%.

sec'20

Abstract

Content Delivery Networks (CDNs) serve a large and increasing portion of today’s web content. Beyond caching, CDNs provide their customers with a variety of services, including protection against DDoS and targeted attacks. As the web shifts from HTTP to HTTPS, CDNs continue to provide such services by also assuming control of their customers’ private keys, thereby breaking a fundamental security principle: private keys must only be known by their owner.

We present the design and implementation of Phoenix, the first truly “keyless CDN”. Phoenix uses secure enclaves (in particular Intel SGX) to host web content, store sensitive key material, apply web application firewalls, and more on otherwise untrusted machines. To support scalability and multitenancy, Phoenix is built around a new architectural primitive which we call conclaves: containers of enclaves. Conclaves make it straightforward to deploy multi-process, scalable, legacy applications. We also develop a filesystem to extend the enclave’s security guarantees to untrusted storage. In its strongest configuration, Phoenix reduces the knowledge of the edge server to that of a traditional on-path HTTPS adversary. We evaluate the performance of Phoenix with a series of micro- and macro-benchmarks.

Challenges

- Support for multi-tenants

- Legacy App

- Bootstrapping trust

Comments

- Scalable

- Curious/Byzantine Threat Model

- All Goals achieved separately by previous work

chmod 600 ./id_rsa*

# or

chmod 644 ./id_rsa.pub

eval $(ssh-agent -s)

ssh-add ~/.ssh/id_rsaAbstract

Memory access bugs, including buffer overflows and uses of freed heap memory, remain a serious problem for programming languages like C and C++. Many memory error detectors exist, but most of them are either slow or detect a limited set of bugs, or both.

This paper presents AddressSanitizer, a new memory error detector. Our tool finds out-of-bounds accesses to heap, stack, and global objects, as well as use-after-free bugs. It employs a specialized memory allocator and code instrumentation that is simple enough to be implemented in any compiler, binary translation system, or even in hardware.

AddressSanitizer achieves efficiency without sacrificing comprehensiveness. Its average slowdown is just 73% yet it accurately detects bugs at the point of occurrence. It has found over 300 previously unknown bugs in the Chromium browser and many bugs in other software.

Goods

- Tracking of valid memory via shadow mapping

- Checks done via instrumentation

- Stack/heal object awareness

- No FP

Bads

- Still mild overhead

- Can miss some bugs (redzones might be skipped)

Questions

- Why this work is SO influential?

USENIX'20

Kernel-mode drivers are challenging to analyze for vulnerabilities, yet play a critical role in maintaining the security of OS kernels. Their wide attack surface, exposed via both the system call interface and the peripheral interface, is often found to be the most direct attack vector to compromise an OS kernel. Researchers therefore have proposed many fuzzing techniques to find vulnerabilities in kernel drivers. However, the performance of kernel fuzzers is still lacking, for reasons such as prolonged execution of kernel code, interference between test inputs, and kernel crashes.

This paper proposes lightweight virtual machine checkpointing as a new primitive that enables high-throughput kernel driver fuzzing. Our key insight is that kernel driver fuzzers frequently execute similar test cases in a row, and that their performance can be improved by dynamically creating multiple checkpoints while executing test cases and skipping parts of test cases using the created checkpoints. We built a system, dubbed Agamotto, around the virtual machine checkpointing primitive and evaluated it by fuzzing the peripheral attack surface of USB and PCI drivers in Linux. The results are convincing. Agamotto improved the performance of the state-of-the-art kernel fuzzer, Syzkaller, by 66.6% on average in fuzzing 8 USB drivers, and an AFL-based PCI fuzzer by 21.6% in fuzzing 4 PCI drivers, without modifying their underlying input generation algorithm.

Design

- Using checkpoints to store state and restore the state => accelerate fuzzing

Papers

Confidential Computing within an AI Accelerator

We present IPU Trusted Extensions (ITX), a set of hardware extensions that enables trusted execution environments in Graphcore’s AI accelerators. ITX enables the execution of AI workloads with strong confidentiality and integrity guarantees at low performance overheads. ITX isolates workloads from untrusted hosts, and ensures their data and models remain encrypted at all times except within the accelerator’s chip. ITX includes a hardware root-of-trust that provides attestation capabilities and orchestrates trusted execution, and on-chip programmable cryptographic engines for authenticated encryption of code/data at PCIe bandwidth.

We also present software for ITX in the form of compiler and runtime extensions that support multi-party training without requiring a CPU-based TEE.

We included experimental support for ITX in Graphcore’s GC200 IPU taped out at TSMC’s 7nm node. Its evaluation on a development board using standard DNN training workloads suggests that ITX adds < 5% performance overhead and delivers up to 17x better performance compared to CPU-based confidential computing systems based on AMD SEV-SNP.

Attack: Function (Call) sequence can be modified by an untrusted attacker.

Solution: hash chain built by trusted functions, which can be verified by the user.

Not a very interesting paper.

Abstract

Recent advances of Large Language Models (LLMs), e.g., ChatGPT, exhibited strong capabilities of comprehending and responding to questions across a variety of domains. Surprisingly, ChatGPT even possesses a strong understanding of program code. In this paper, we investigate where and how LLMs can assist static analysis by asking appropriate questions. In particular, we target a specific bug-finding tool, which produces many false positives from the static analysis. In our evaluation, we find that these false positives can be effectively pruned by asking carefully constructed questions about function-level behaviors or function summaries. Specifically, with a pilot study of 20 false positives, we can successfully prune 8 out of 20 based on GPT-3.5, whereas GPT-4 had a near-perfect result of 16 out of 20, where the four failed ones are not currently considered/supported by our questions, e.g., involving concurrency. Additionally, it also identified one false negative case (a missed bug). We find LLMs a promising tool that can enable a more effective and efficient program analysis.

Noticeable Points

Prompt Design

- Chain of Thought: Step-by-step result

- Task decomposition: breaking down the huge tasks into smaller pieces

- Progressive prompt: interactively feed information

Challenges in traditional code analysis

- Inherent Knowledge Boundaries.

Static analysis requires domain knowledge to model special functions which cannot be analyzed. E.g., assembly code, hardware behaviors, concurrency, and compiler built-ins.

- Exhaustive Path Exploration.

Research Papers

Attacks

- A New Era in LLM Security: Exploring Security Concerns in Real-World LLM-based Systems

- A First Look at GPT Apps: Landscape and Vulnerability steals system prompt/files

- LLM Platform Security: Applying a Systematic Evaluation Framework to OpenAI’s ChatGPT Plugins

- Demystifying RCE Vulnerabilities in LLM-Integrated Apps RCE based on prompts

Defense

MISC

Previous Attack

Jailbreak

Images

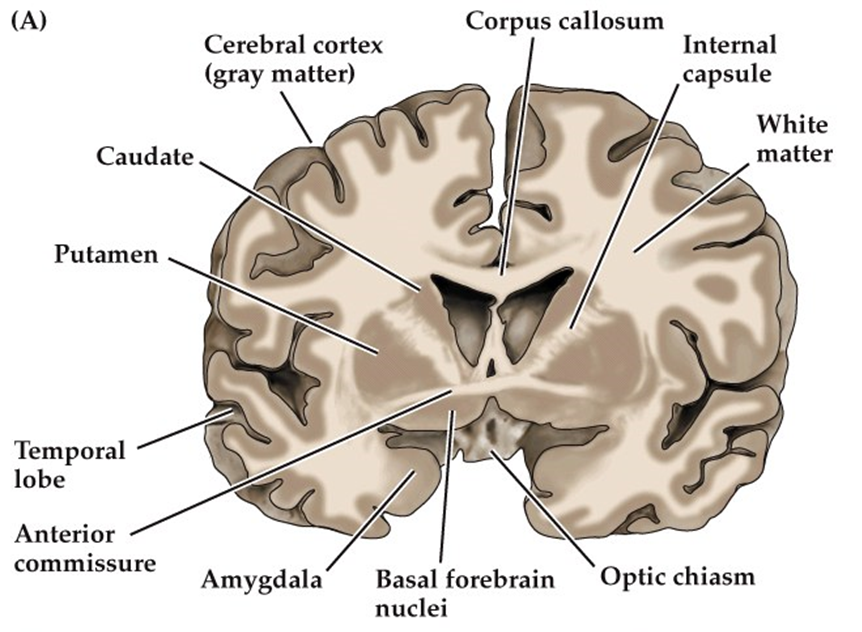

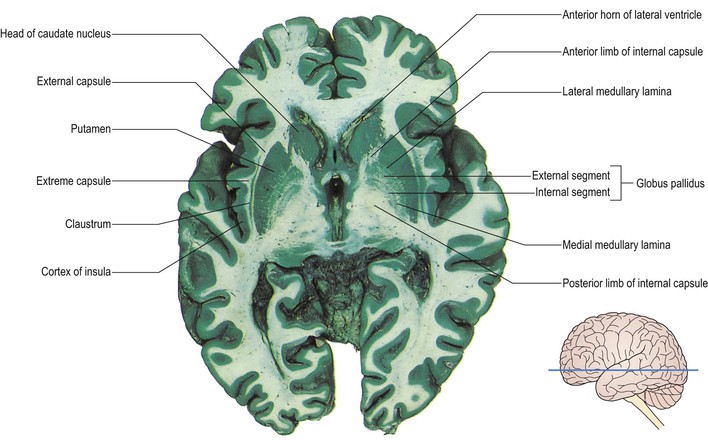

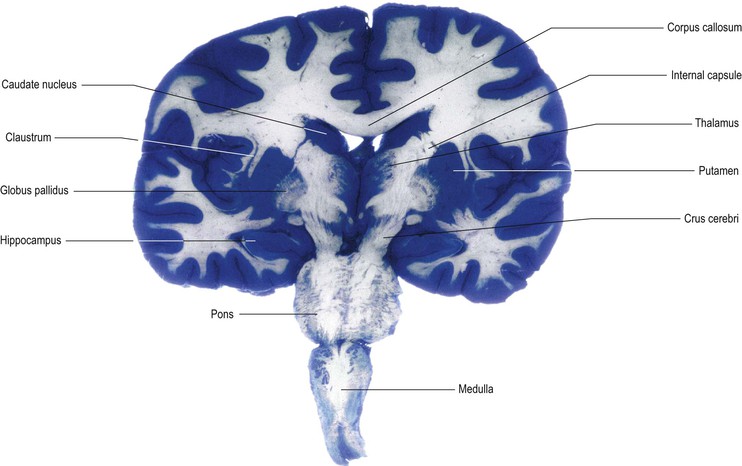

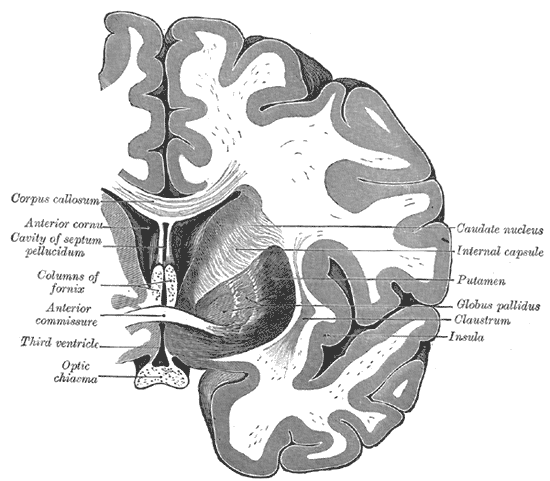

Functions of Caudate

Visual

- Anterior portion of caudate connected with lateral and medial prefrontal cortices and involved with working memory and executive function (cognitive and emotional portion)

- Tail of caudate interacts with inferior temporal lobe to process visual information and movement control (lesions can impair visual discrimination of objects).

- Receives topographic visual input from cortex, integrates it and inhibits substantia nigra, which disinhibits the superior colliculus to enable coordinated eye movements.

Associative learning

- Connecting visual stimuli with motor responses and learning with feedback

- Body and tail are associated with learning acquisition, while to head is involved with processing feedback on learning trials.

- Right caudate has numerous connections with hippocampus that correlates with memory competitions

- Medial dorsal striatum involved with working memory, and formation of certain memories.

Clinical Significance

- Degree of dementia and performance in PD correlates with loss of dopaminergic neurons projecting to caudate.

- Early deficits in working memory in PD associated with caudate dysfunction.

- Decreases in caudate bloodflow associated with cognitive impairment seen in HIV.

- Volume of caudate decreased in several forms of dementia (less in AD than other types of dementia)

- Impulse control and hyperactivity roles: activity increased in manic state of bipolar; left caudate volume correlates with severity of obsessive-compulsive disorder. Volumetric differences in those with ADHD compared with controls.

- Schizophrenia: volumes are reduced, and increase with drug threrapy.

Putamen

- Learning and motor control, including speech articulation, reward, addiction

- Susceptible to infarction because of vasculature

- Dopamine depletion results in many symptoms of PD

- Changes in volume linked to bipolar, Tourette syndrome, ADHD, ASD, schizophrenia.

- Greater volume in bilinguals than monolinguals

- Volume inversely correlated with emotion recognition in faces

- Involved with mother’s recognition of own infant.

Substantia Nigra

- Midbrain dopaminergic nucleus

- Modulates motor function and reward

- Projections from SN to putamen (the nigrostriatal pathway) is highly implicated in PD

- SN divided into two subsections: the pars compacta (SNpc) and the pars reticulata (SNpr)

- SNpc contains dominergic neurons: dark in color because of high amount of neuromelanin which forms the precursor to dopamine (L-dopa).

- SNpr contains inhibitory GABA neurons

Direct and Indirect pathways of the Basal Ganglia

- SNpc has Dopaminergic projections to striatum, these synapse on D1 DA receptors and D2 DA receptors.

- The D1 receptors in striatum synapse on internal Globus Pallidus forming the direct pathway of the basal ganglia.

- The D2 neurons project to external GP which inhibit the stimulatory subthalamic nuclei that synapse on the GPi: the indirect pathway.

- Indirect pathway indirectly stimulates the Gpi, whereas the direct pathway directly inhibits the Gpi.

Filesystem + Formal Method + SGX

New trusted computing primitives such as Intel SGX have shown the feasibility of running user-level applications in enclaves on a commodity trusted processor without trusting a large OS. However, the OS can still compromise the integrity of an enclave by tampering with the system call return values. In fact, it has been shown that a subclass of these attacks, called Iago attacks, enables arbitrary logic execution in enclave programs. Existing enclave systems have very large TCB and they implement ad-hoc checks at the system call interface which are hard to verify for completeness. To this end, we present BesFS—the first filesystem interface which provably protects the enclave integrity against a completely malicious OS. We prove 167 lemmas and 2 key theorems in 4625 lines of Coq proof scripts, which directly proves the safety properties of the BesFS specification. BesFS comprises of 15 APIs with compositional safety and is expressive enough to support 31 real applications we test. BesFS integrates into existing SGX-enabled applications with minimal impact to TCB. BesFS can serve as a reference implementation for hand-coded API checks.

SGX to protect GPU then prevent cheating

Abstract

Online gaming, with a reported 152 billion US dollar market, is immensely popular today. One of the critical issues in multiplayer online games is cheating, in which a player uses an illegal methodology to create an advantage beyond honest game play. For example, wallhacks, the main focus of this work, animate enemy objects on a cheating player's screen, despite being actually hidden behind walls (or other occluding objects). Since such cheats discourage honest players and cause game companies to lose revenue, gaming companies deploy mitigation solutions alongside game applications on the player's machine. However, their solutions are fundamentally flawed since they are deployed on a machine where the attacker has absolute control.

Images

Cerebellum

1. Vermis. 2. Superior cerebellar peduncle (red). 3. Middle cerebellar peduncle (blue). 4. Inferior cerebellar peduncle (green). 5. Nodulus. 6. Flocculus. 7. Posterolateral fissure. 8. Cerebellar tonsils.

Medulla

Midbrain

Pons

References:

Build

Dependencies

sudo apt install build-essential libncurses-dev bison flex libssl-dev libelf-dev

sudo apt install dwarves zstdConfig

# Using current config

cp -v /boot/config-$(uname -r) .config

make menuconfigNote that CONFIG_SYSTEM_TRUSTED_KEYS= and CONFIG_SYSTEM_REVOCATION_KEYS may need to be set into "" in .config file, and CONFIG_X86_X32 may not be supported.

Build & Install

make -j32

sudo make modules_install

sudo make install

sudo update-grubUninstall

Delete what have been installed in several places:

- image:

/boot/*[kernel_ver] - modules:

/lib/modules/[kernel_ver]

SoCC'23

System

- Trust TPM & Secure Boot

- Security Monitor implemented at VMX-root level, and the model(with implementation) are formally verified.

- Enforce access control by trapping instrcuctions

Covered Attacks Surfaces

- DMA buffer

- MMIO mapping

- GPU context

Attack vectors targeting these surfaces:

- Malicious peripherals

- Concurrent CPU Access (specific to GEVisor)

Question

- How to ensure the integrity of Hypercalls? (i.e., protect the ring buffer)

- How to prove active attackers would be detected by GEVisor? (and how to define active attackers?)

- Why does the formal verification pass for GEVisor with flaws? (or what does pass mean for GEVisor?)

After Reboot

# On Host

sudo modprobe vfio-pci

sudo sh -c "echo 10de 2331 > /sys/bus/pci/drivers/vfio-pci/new_id"

# Optionally

sudo python3 ./nvidia_gpu_tools.py --gpu-name=H100 --set-cc-mode=devtools --reset-after-cc-mode-switch

# Launch VM; In VM

sudo nvidia-smi conf-compute -srs 1NDSS'21

Abstract

Intel SGX aims to provide the confidentiality of user data on untrusted cloud machines. However, applications that process confidential user data may contain bugs that leak information or be programmed maliciously to collect user data. Existing research that attempts to solve this problem does not consider multi-client isolation in a single enclave. We show that by not supporting such isolation, they incur considerable slowdown when concurrently processing multiple clients in different processes, due to the limitations of SGX.

This paper proposes CHANCEL, a sandbox designed for multi-client isolation within a single SGX enclave. In particular, CHANCEL allows a program’s threads to access both a per-thread memory region and a shared read-only memory region while servicing requests. Each thread handles requests from a single client at a time and is isolated from other threads, using a Multi-Client Software Fault Isolation (MCSFI) scheme. Furthermore, CHANCEL supports various in-enclave services such as an in-memory file system and shielded client communication to ensure complete mediation of the program’s interactions with the outside world. We implemented CHANCEL and evaluated it on SGX hardware using both micro-benchmarks and realistic target scenarios, including private information retrieval and product recommendation services. Our results show that CHANCEL outperforms a baseline multi-process sandbox between 4.06−53.70× on micro-benchmarks and 0.02 − 21.18× on realistic workloads while providing strong security guarantees.

Motivation & Target & Model

- Potentially malicious binary

- Binary/clients cannot leak confidential data

- Clients cannot compromise others' threads

- Multi-client isolation in a single enclave

Design & Method

- In-memory FS, client communication, memory management

- No additional hardware requirements (such as MPX/SGX2)

- Reserves two registers:

r14,r15 - Provide a toolchain to generate instrumented binary

Protections

- Chancel includes comprehensive evaluations on real-world tasks and benchmarks

- ASPLOS 2020

- Paper

- Soruce Code

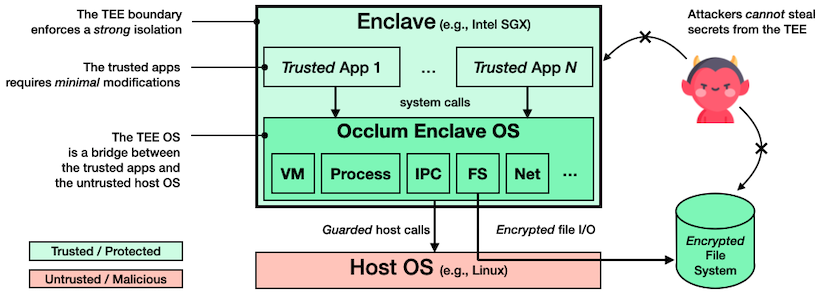

Intel SGX is a hardware-based trusted execution environment (TEE), which enables an application to compute on confidential data in a secure enclave. SGX assumes a powerful threat model, in which only the CPU itself is trusted; anything else is untrusted, including the memory, firmware, system software, etc. An enclave interacts with its host application through an exposed, enclave-specific, (usually) bi-directional interface. This interface is the main attack surface of the enclave. The attacker can invoke the interface in any order and inputs. It is thus imperative to secure it through careful design and defensive programming.

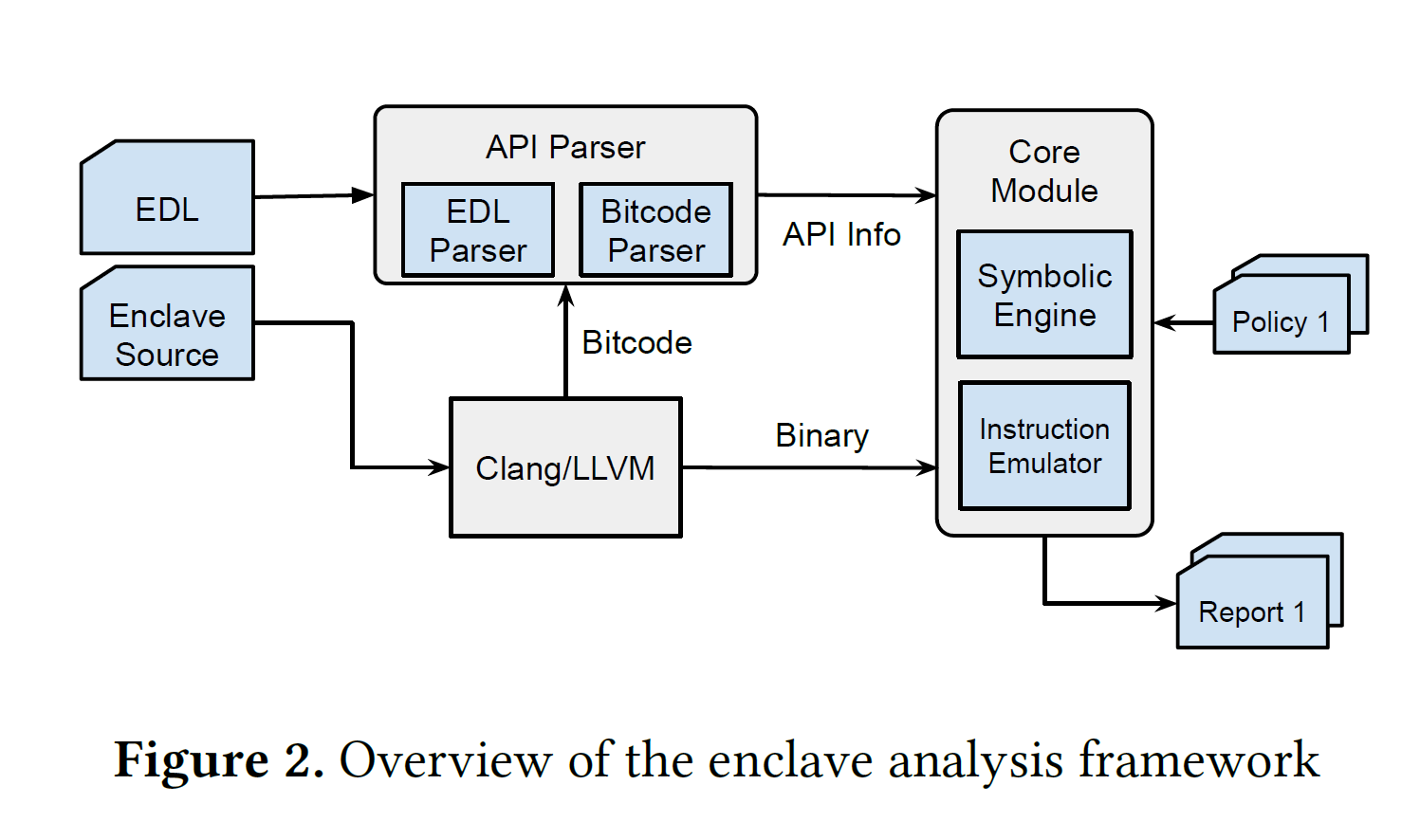

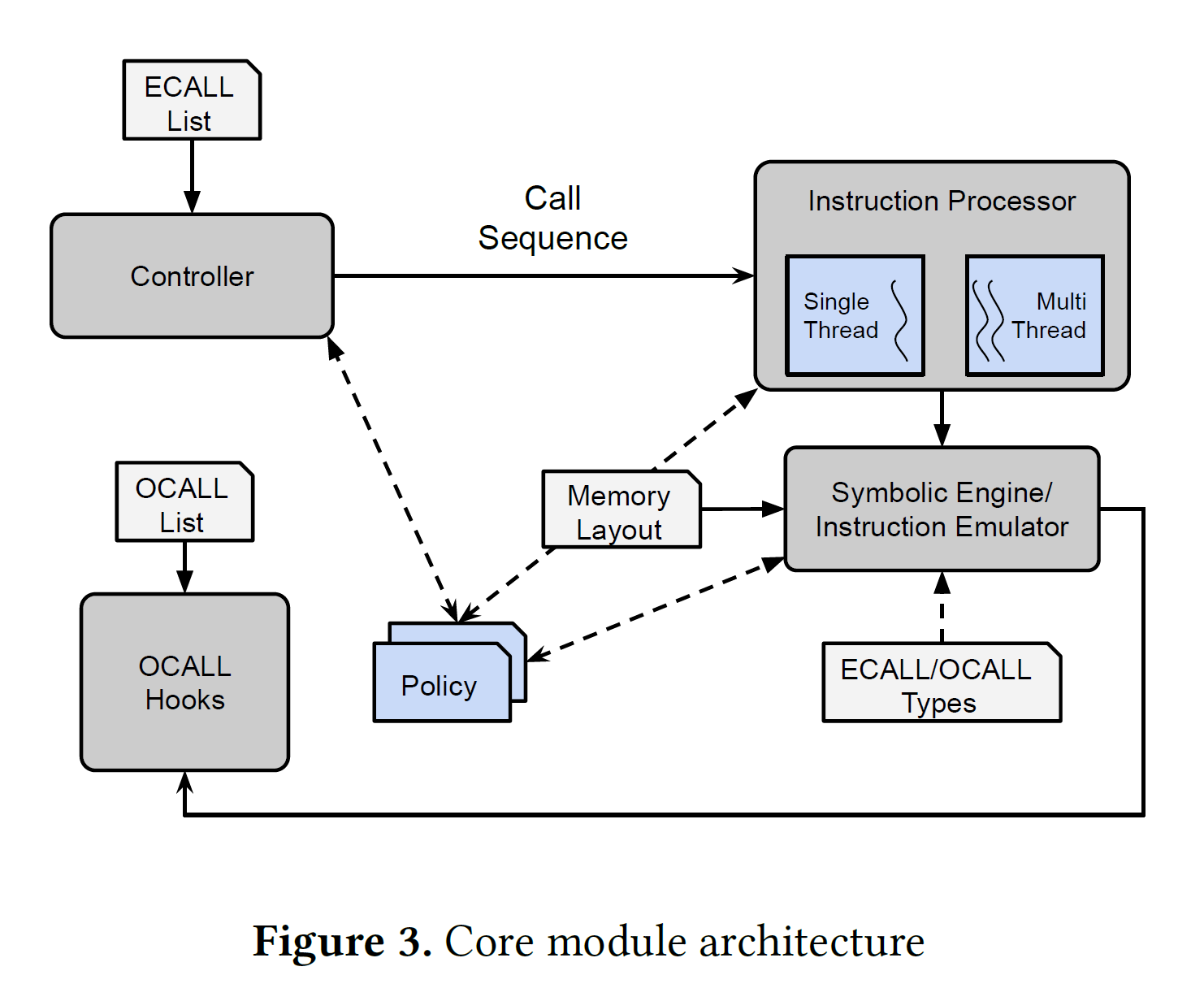

In this work, we systematically analyze the attack models against the enclave untrusted interfaces and summarized them into the COIN attacks -- Concurrent, Order, Inputs, and Nested. Together, these four models allow the attacker to invoke the enclave interface in any order with arbitrary inputs, including from multiple threads. We then build an extensible framework to test an enclave in the presence of COIN attacks with instruction emulation and concolic execution. We evaluated ten popular open-source SGX projects using eight vulnerability detection policies that cover information leaks, control-flow hijackings, and memory vulnerabilities. We found 52 vulnerabilities. In one case, we discovered an information leak that could reliably dump the entire enclave memory by manipulating the inputs. Our evaluation highlights the necessity of extensively testing an enclave before its deployment.

Target

To find vulnerabilities of SGX applications in four models:

- Concurrent calls: multithread, race condition, improper lock...

- Order: assumes the calling sequence

- Input manipulation: bad OCALL return val & ECALL arguments

- Nested calls: calling OCALL that invokes ECALL, not implemented

Design & Method

The design of COIN:

- Emulation: QEMU

- Symbolic execution: Triton (backed by z3)

- Policy-based vulnerability discovery

Results

COIN uses 8 policies to find the potential vulnerabilities:

- Heap info leak

- Stack info leak

- Ineffectual condition

- Use after free

- Double free

- Stack overflow

- Heap overflow

- Null pointer dereference

COIN Attack

- ASPLOS 2020

- Paper

- Soruce Code

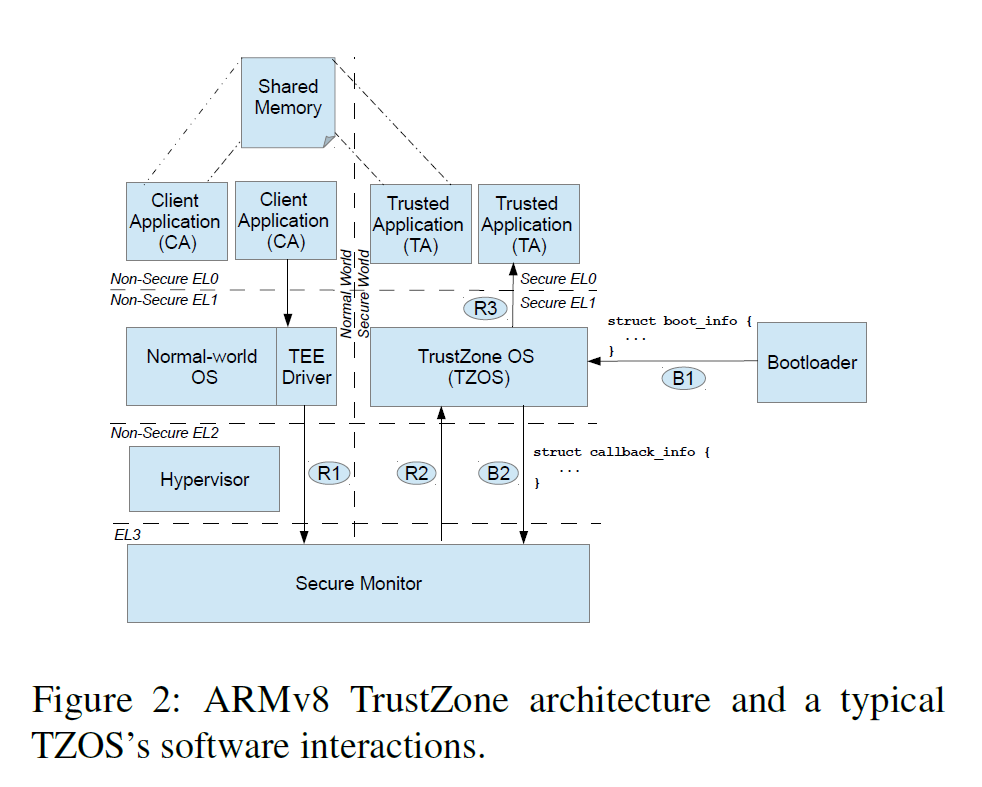

Target

- Emulate TrustZone OSes(TZOS) and Trusted Applications (TAs)

- Abstract and reimplement a subset of hardware/software interfaces

- Fuzz these components

- TZOSes: QSEE, Huawei, OPTEE, Kinibi, TEEGRIS(Samsung) & TAs

Results

COIN uses 8 policies to find the potential vulnerabilities:

- Heap info leak

- Stack info leak

- Ineffectual condition

- Use after free

- Double free

- Stack overflow

- Heap overflow

- Null pointer dereference

Review

Strength

- Symbolic execution + emulation

- Policies can be configurable

- Real world problems

- Solid work

- Efforts taken to run TZOS and TA in emulation environment

- Acceptable performance

Weakness

- nested call left unimplemented

- May not be powerful enough to deal with complicate situations

- Policies are mainly at relatively low-level

- Low coverage

- Crashes -X-> vulnerabilities

PartEmu

- USENIX Security 2020

- Paper

- Source code unavailable

Traditional TrustZone OSes and Applications is not easy to fuzz because they cannot be instrumented or modified easily in the original hardware environment. So to emulate them for fuzzing purpose.

Design & Method

- Re-host the TZOS frimware

- Choose the components to reuse/emulate carefully

- Bootloader

- Secure Monitor

- TEE driver and TEE userspace

- MMIO registers (easy to emulate)

Tools

- TriforceAFL + QEMU

- Manually written Interfaces

Results

Emulations works well. For upgraded TZOSes, only a few efforts are needed for compatibility.

TAs

Challenges

- Identifying the fuzzed target

- Result stability (migrate to hardware, reproducibility)

- Randomness

| Class | Vulnerability Types | Crashes |

|---|---|---|

| Availability | Null-pointer dereferences | 9 |

| Insufficient shared memory crashes | 10 | |

| Other | 8 | |

| Confidentiality | Read from attacker-controlled pointer to shared memory | 8 |

| Read from attacker-controlled | 0 | |

| OOB buffer length to shared memory | ||

| Integrity | Write to secure memory using attacker-controlled pointer | 11 |

| Write to secure memory using | 2 | |

| attacker-controlled OOB buffer length |

Just like the previous paper, the main causes of the crashes can be attributed to:

- Assumptions of Normal-World Call Sequence

- Unvalidated Pointers from Normal World

- Unvalidated Types

TZOSes

- Normal-World Checks

- Assumptions of Normal-World Call Sequence

Abstract

We present a compiler-based scheme to protect the confidentiality of sensitive data in low-level applications (e.g. those written in C) in the presence of an active adversary. In our scheme, the programmer marks sensitive data by lightweight annotations on the top-level definitions in the source code. The compiler then uses a combination of static dataflow analysis, runtime instrumentation, and a novel taint-aware form of control-flow integrity to prevent data leaks even in the presence of low-level attacks. To reduce runtime overheads, the compiler uses a novel memory layout.

We implement our scheme within the LLVM framework and evaluate it on the standard SPEC-CPU benchmarks, and on larger, real-world applications, including the NGINX webserver and the OpenLDAP directory server. We find that the performance overheads introduced by our instrumentation are moderate (average 12% on SPEC), and the programmer effort to port the applications is minimal.

Methodology

Key insight: complete memory safety and perfect control-flow integrity (CFI) are neither sufficient nor necessary for preventing data leaks.

- Compiler performs dataflow analysis: analyzer infer expected type of taint

- Different types are placed into two separate regions

- Not requiring alias analysis

- Exclude the compiler from the TCB via a tiny verifier

Abstract

The constant-time discipline is a software-based countermeasure used for protecting high assurance cryptographic implementations against timing side-channel attacks. Constant-time is effective (it protects against many known attacks), rigorous (it can be formalized using program semantics), and amenable to automated verification. Yet, the advent of micro-architectural attacks makes constant-time as it exists today far less useful.

This paper lays foundations for constant-time programming in the presence of speculative and out-of-order execution. We present an operational semantics and a formal definition of constant-time programs in this extended setting. Our semantics eschews formalization of microarchitectural features (that are instead assumed under adversary control), and yields a notion of constant-time that retains the elegance and tractability of the usual notion. We demonstrate the relevance of our semantics in two ways: First, by contrasting existing Spectre-like attacks with our definition of constant-time. Second, by implementing a static analysis tool, Pitchfork, which detects violations of our extended constant-time property in real world cryptographic libraries.

Methodology

- A model to incorporate speculative execution.

- Abstract machine: fetch, execute, retire.

- Reorder buffer => out-of-order and speculative execution

- Directives can be initiated to model the detailed schedule of the reordered micro-ops

- Observations are also modeled in such process to mimic the leakage visible to the attacker

- Modeled instructions:

op,fence,load,store,br,call,ret

Artifact

- Symbolic execution based on angr

- Schedule the worst case reorder buffer => maximize potential leakage

- Can indeed found threat

Comments

- Are the threats confirmed by the developers?

- Is the model formalized in a proof checker?

- Execution time of instructions is not modeled? (or need not to be modeled?)

The presence of large numbers of security vulnerabilities in popular feature-rich commodity operating systems has inspired a long line of work on excluding these operating systems from the trusted computing base of applications, while retaining many of their benefits. Legacy applications continue to run on the untrusted operating system, while a small hyper visor or trusted hardware prevents the operating system from accessing the applications' memory. In this paper, we introduce controlled-channel attacks, a new type of side-channel attack that allows an untrusted operating system to extract large amounts of sensitive information from protected applications on systems like Overshadow, Ink Tag or Haven. We implement the attacks on Haven and Ink Tag and demonstrate their power by extracting complete text documents and outlines of JPEG images from widely deployed application libraries. Given these attacks, it is unclear if Over shadow's vision of protecting unmodified legacy applications from legacy operating systems running on off-the-shelf hardware is still tenable.

The key intuition is to exploit the fact that a regular applicationusually shows different patterns in control transfers or data accesses when the sensitive data it is processing are different.

- Induce Page Fault

- Recognize page access -> control/data flow

- Recover sensitive info

Mitigation

Source: Wikipedia

Images

Table from Wiki

| 编号 | 名称 | 性质 | 连脑部位 | 进出颅腔部位 | 核团 | 功能 |

|---|---|---|---|---|---|---|

| 0 | 终末神经 | ? | 終板 | 篩板 | 中隔內核(Septal nuclei) | 和費洛蒙的感測有關 |

| I | 嗅神经 | 感觉性 | 端脑 | 篩板 | 嗅前核(Anterior olfactory nucleus) | 传递嗅觉信息 |

| II | 視神經 | 感觉性 | 间脑 | 視神經管(Optic canal) | 视网膜神经节细胞[5] | 向大脑传递视觉信息 |

| III | 动眼神经 | 运动性 | 中脑前部 | 眶上裂(Superior orbital fissure) | 动眼神经核(Oculomotor nucleus) | 支配上瞼舉肌(英语:Levator palpebrae superioris),上直肌、内直肌、下直肌和下斜肌,来协同完成眼球的运动;支配瞳孔括约肌和睫状体的收缩。 |

| IV | 滑车神经 | 运动性 | 中脑后部 | 眶上裂 | 滑车神经核(Trochlear nucleus) | 支配上斜肌(Superior oblique muscle),来控制眼球的水平或者汇聚运动 |

| V | 三叉神经 | 混合性 | 橋腦 | 眶上裂(眼神经),圆孔(上颌神经),卵圆孔(下颌神经) | 三叉神经核感觉主核,三叉神经脊束核,中脑三叉神经核,三叉神经运动核 | 接受面部的感觉输入;支配咀嚼肌的收缩 |

| VI | 外旋神經 | 运动性 | 橋腦前缘 | 眶上裂 | 外展神经核 | 支配外直肌 |

| VII | 顏面神經 | 混合性 | 橋腦(橄榄核之上桥小脑角部位) | 内耳道、莖乳突孔(Stylomastoid foramen) | 面神经核、孤束核、上涎神经核 | 接收舌肌前三分之二部位的感觉输入;支配面部表情肌、二腹肌、镫骨肌;支配唾液腺和泪腺的分泌。 |

| VIII | 前庭耳蝸神經 | 感觉性 | 橋腦 | 内耳道 | 前庭神经核、耳蜗核 | 接受声音、旋转、重力(对保持平衡和运动非常重要)的感觉输入。前庭分支和耳蜗分支主要传递听觉。 |

| IX | 舌咽神经 | 混合性 | 延腦 | 頸靜脈孔(Jugular foramen) | 疑核、下涎核、孤束核 | 接受舌部后三分之一的感觉输入;部分感觉经腭扁桃体传递到脑;支配腮腺的分泌;支配茎突的运动。 |

| X | 迷走神经 | 混合性 | 延腦 | 頸靜脈孔 | 疑核、背运动迷走神经核、孤束核 | 接受来自会咽的特殊味觉输入;支配喉部肌肉和咽肌(有舌咽神经支配的茎突除外);提供了几乎所有的胸、腹部和内臟的副交感神经纤维。主要功能:控制发声肌肉、软腭和共振。损害症状:吞咽困难與腭咽闭合不全。 |

| XI | 副神经 | 运动性 | 延腦 | 頸靜脈孔 | 孤束核、脊髓副神经核 | 支配胸锁乳突肌与斜方肌,与迷走神经(CN X)功能有部分重叠。损害症状:不能耸肩,头部运动变弱。 |

| XII | 舌下神经 | 混合性 | 延腦 | 舌下神经管(Hypoglossal foramen) | 舌下神经核 | 支配舌部肌肉的运动(由迷走神经支配的舌腭肌除外);对吞咽和语音清晰度非常重要。舌部的肌肉的感覺。 |

Gyri and Sulci

As control-flow hijacking defenses gain adoption, it is important to understand the remaining capabilities of adversaries via memory exploits. Non-control data exploits are used to mount information leakage attacks or privilege escalation attacks program memory. Compared to control-flow hijacking attacks, such non-control data exploits have limited expressiveness, however, the question is: what is the real expressive power of non-control data attacks? In this paper we show that such attacks are Turing-complete. We present a systematic technique called data-oriented programming (DOP) to construct expressive non-control data exploits for arbitrary x86 programs. In the experimental evaluation using 9 programs, we identified 7518 data-oriented x86 gadgets and 5052 gadget dispatchers, which are the building blocks for DOP. 8 out of 9 real-world programs have gadgets to simulate arbitrary computations and 2 of them are confirmed to be able to build Turing-complete attacks. We build 3 end-to-end attacks to bypass randomization defenses without leaking addresses, to run a network bot which takes commands from the attacker, and to alter the memory permissions. All the attacks work in the presence of ASLR and DEP, demonstrating how the expressiveness offered by DOP significantly empowers the attacker.

There are no function call gadgets in data-oriented programming, as it does not change the control data.

- Operate on data to achieve arbitrary computation

AAAI'21, PDF

Abstract

Natural language comments convey key aspects of source code such as implementation, usage, and pre- and postconditions. Failure to update comments accordingly when the corresponding code is modified introduces inconsistencies, which is known to lead to confusion and software bugs. In this paper, we aim to detect whether a comment becomes inconsistent as a result of changes to the corresponding body of code, in order to catch potential inconsistencies just-in-time, i.e., before they are committed to a code base. To achieve this, we develop a deep-learning approach that learns to correlate a comment with code changes. By evaluating on a large corpus of comment/code pairs spanning various comment types, we show that our model outperforms multiple baselines by significant margins. For extrinsic evaluation, we show the usefulness of our approach by combining it with a comment update model to build a more comprehensive automatic comment maintenance system which can both detect and resolve inconsistent comments based on code changes.

- Learn on AST code edit (GGNN, gated graph neural networks)

- BiGRU encoding

- Use in post hoc and just-in-time from commit

We introduce a new concept called brokered delegation. Brokered delegation allows users to flexibly delegate credentials and rights for a range of service providers to other users and third parties. We explore how brokered delegation can be implemented using novel trusted execution environments (TEEs). We introduce a system called DelegaTEE that enables users (Delegatees) to log into different online services using the credentials of other users (Owners). Credentials in DelegaTEE are never revealed to Delegatees and Owners can restrict access to their accounts using a range of rich, contextually dependent delegation policies.

DelegaTEE fundamentally shifts existing access control models for centralized online services. It does so by using TEEs to permit access delegation at the user's discretion. DelegaTEE thus effectively reduces mandatory access control (MAC) in this context to discretionary access control (DAC). The system demonstrates the significant potential for TEEs to create new forms of resource sharing around online services without the direct support from those services.

We present a full implementation of DelegaTEE using Intel SGX and demonstrate its use in four real-world applications: email access (SMTP/IMAP), restricted website access using a HTTPS proxy, e-banking/credit card, and a third-party payment system (PayPal).

- Account sharing: delegate the credentials to other users securely

- Centrally and P2P

Semantic Inconsistency

- Bugs as Deviant Behavior: A General Approach to Inferring Errors in Systems Code

This is a very interesting paper, maybe the earliest work in this area. The idea is simple: some operations are based on implicit assumptions. For example, dereference implies a valid pointer.

- Detecting Missing-Check Bugs via Semantic- and Context-Aware Criticalness and Constraints Inferences PDF

Solely focus on missing checks in Linux kernel.

- APISAN: Sanitizing API Usages through Semantic Cross-checking PDF

To find API usage errors, APISAN automatically infers semantic correctness, called semantic beliefs, by analyzing the source code of different uses of the API.

the more API patterns developers use in similar contexts, the more confidence we have about the correct API usage.

This is a probabilistic approach. Symbolic execution can help to construct a "semantic belief" of the outcome (and pre- and post- conditions) of executing an API, and such construction will be more and more precise as there are more samples in the dataset.

This work is based mainly on relaxed symbolic execution.

- Scalable and systematic detection of buggy inconsistencies in source code Paper

Analysis of bugs on cloned code. Searching: hash of code snippet, AST level.

- Gap between theory and practice: an empirical study of security patches in solidity

- Which bugs are missed in code reviews: An empirical study on SmartSHARK dataset

Semantic bugs are mostly missed (51.34%) from the review process, 7 out of 96 are typos.

- VarCLR: Variable Semantic Representation Pre-training via Contrastive Learning

- Bug characteristics in open source software

Careless programming causes many bugs in the evaluated software. For example, simple bugs such as typos account for 7.8–15.0 % of semantic bugs in Mozilla, Apache, and the Linux kernel

- Automating Code Review Activities by Large-Scale Pre-training

ICSE'19

Side-channel attacks allow an adversary to uncover secret program data by observing the behavior of a program with respect to a resource, such as execution time, consumed memory or response size. Side-channel vulnerabilities are difficult to reason about as they involve analyzing the correlations between resource usage over multiple program paths. We present DifFuzz, a fuzzing-based approach for detecting side-channel vulnerabilities related to time and space. DifFuzz automatically detects these vulnerabilities by analyzing two versions of the program and using resource-guided heuristics to find inputs that maximize the difference in resource consumption between secret-dependent paths. The methodology of DifFuzz is general and can be applied to programs written in any language. For this paper, we present an implementation that targets analysis of Java programs, and uses and extends the Kelinci and AFL fuzzers. We evaluate DifFuzz on a large number of Java programs and demonstrate that it can reveal unknown side-channel vulnerabilities in popular applications. We also show that DifFuzz compares favorably against Blazer and Themis, two state-of-the-art analysis tools for finding side-channels in Java programs.

- How can a untrusted hypervisor load the disk/image of a CVM in a trustworthy way?

- How to pass the keys from the trusted user to the VM in a secure way?

This document (Confidential Computing secrets) specifies that:

Confidential Computing (coco) hardware such as AMD SEV (Secure Encrypted Virtualization) allows guest owners to inject secrets into the VMs memory without the host/hypervisor being able to read them. In SEV, secret injection is performed early in the VM launch process, before the guest starts running.

However, it's still not clear to me how the secrets are passed to the VM securely. I guess this can be a bit different for SEV and TDX.

Fortunately, Linux provides a good documentation explaining the details here for SEV.

Notably, there is a command called KVM_SEV_LAUNCH_SECRET, documented like this:

KVM_SEV_LAUNCH_SECRETThe KVM_SEV_LAUNCH_SECRET command can be used by the hypervisor to inject secret data after the measurement has been validated by the guest owner.Parameters (in): struct kvm_sev_launch_secretReturns: 0 on success, -negative on error

The structure looks like this:

struct kvm_sev_launch_secret {

__u64 hdr_uaddr; /* userspace address containing the packet header */

__u32 hdr_len;

__u64 guest_uaddr; /* the guest memory region where the secret should be injected */

__u32 guest_len;

__u64 trans_uaddr; /* the hypervisor memory region which contains the secret */

__u32 trans_len;

};It seems like there is a mechanism for the CVM to take secrets from the host memory region.

- Interactive shell

docker run -it <image name> [shell] - Show current containers

docker container ls - Commit changes

docker commit <container id> [name] - Attach a running container

docker attach <container id>

- Run with SGX

docker run -it -v /var/run/aesmd:/var/run/aesmd --device=/dev/isgx [image name]

Other Commands

- show network configs of containers

docker network inspect bridge

Abstract

Dune is a system that provides applications with direct but safe access to hardware features such as ring protection, page tables, and tagged TLBs, while preserving the existing OS interfaces for processes. Dune uses the virtualization hardware in modern processors to provide a process, rather than a machine abstraction. It consists of a small kernel module that initializes virtualization hardware and mediates interactions with the kernel, and a user-level library that helps applications manage privileged hardware features. We present the implementation of Dune for 64bit x86 Linux. We use Dune to implement three userlevel applications that can benefit from access to privileged hardware: a sandbox for untrusted code, a privilege separation facility, and a garbage collector. The use of Dune greatly simplifies the implementation of these applications and provides significant performance advantages.

Methodology

Turn user-level processes into a lightweight VM-abstraction. This achieves isolation while enhances performance dramatically on syscalls, interrupts and page table operations.

Such abstraction is done by Dune kernel module on the host (VMX root, ring 0), and a libDune in Dune-mode process (VMX non-root, ring 0),

Main complexity comes from exposing EPT, handling syscalls/interrupts, and state registers.

Overheads (compared to native Linux)

- getpid (syscall-vmcall) - page fault - page walk (TLB miss)

Why these two have overhead?

Enhancements

- ptrace (intercept VMCALL) - trap (in VM) - appel1 & appel2 (memory management performance measurement)

Old version: Binary Compatibility For SGX Enclaves

Yet another middleware to support unmodified binary to run in SGX enclave. This is done through dynamically rewrite binary inside the encalve.

Enclaves, such as those enabled by Intel SGX, offer a hardware primitive for shielding user-level applications from the OS. While enclaves are a useful starting point, code running in the enclave requires additional checks whenever control or data is transferred to/from the untrusted OS. The enclave-OS interface on SGX, however, can be extremely large if we wish to run existing unmodified binaries inside enclaves. This paper presents Ratel, a dynamic binary translation engine running inside SGX enclaves on Linux. Ratel offers complete interposition, the ability to interpose on all executed instructions in the enclave and monitor all interactions with the OS. Instruction-level interposition offers a general foundation for implementing a large variety of inline security monitors in the future.

We take a principled approach in explaining why complete interposition on SGX is challenging. We draw attention to 5 design decisions in SGX that create fundamental trade-offs between performance and ensuring complete interposition, and we explain how to resolve them in the favor of complete interposition. To illustrate the utility of the Ratel framework, we present the first attempt to offer binary compatibility with existing software on SGX. We report that Ratel offers binary compatibility with over 200 programs we tested, including micro-benchmarks and real applications such as Linux shell utilities. Runtimes for two programming languages, namely Python and R, tested with standard benchmarks work out-of-the-box on Ratel without any specialized handling.

Abstract

There has been interest in mechanisms that enable the secure use of legacy code to implement trusted code in a Trusted Execution Environment (TEE), such as Intel SGX. However, because legacy code generally assumes the presence of an operating system, this naturally raises the spectre of Iago attacks on the legacy code. We observe that not all legacy code is vulnerable to Iago attacks and that legacy code must use return values from system calls in an unsafe way to have Iago vulnerabilities.

Based on this observation, we develop Emilia, which automatically detects Iago vulnerabilities in legacy applications by fuzzing applications using system call return values. We use Emilia to discover 51 Iago vulnerabilities in 17 applications, and find that Iago vulnerabilities are widespread and common. We conduct an in-depth analysis of the vulnerabilities we found and conclude that while common, the majority (82.4%) can be mitigated with simple, stateless checks in the system call forwarding layer, while the rest are best fixed by finding and patching them in the legacy code. Finally, we study and evaluate different trade-offs in the design of Emilia.

- Target: SGX Applications running inside a middleware

- Method: Fuzzing

- Tool:

strace(to intercept syscall return value)

Fuzzing Strategies

- Target selection (syscalls)

- Extract branch conditions depending on the return value from syscalls

- Random/valid/invalid return values

- Different return fields

Evaluation

to be continued

Abstract

Formal verification can provide the highest degree of software assurance. Demand for it is growing, but there are still few projects that have successfully applied it to sizeable, real-world systems. This lack of experience makes it hard to predict the size, effort and duration of verification projects. In this paper, we aim to better understand possible leading indicators of proof size. We present an empirical analysis of proofs from the landmark formal verification of the seL4 microkernel and the two largest software verification proof developments in the Archive of Formal Proofs. Together, these comprise 15,018 individual lemmas and approximately 215,000 lines of proof script. We find a consistent quadratic relationship between the size of the formal statement of a property, and the final size of its formal proof in the interactive theorem prover Isabelle. Combined with our prior work, which has indicated that there is a strong linear relationship between proof effort and proof size, these results pave the way for effort estimation models to support the management of large-scale formal verification projects.

Abstract

Security inspection and testing require experts in security who think like an attacker. Security experts need to know code locations on which to focus their testing and inspection efforts. Since vulnerabilities are rare occurrences, locating vulnerable code locations can be a challenging task. We investigated whether software metrics obtained from source code and development history are discriminative and predictive of vulnerable code locations. If so, security experts can use this prediction to prioritize security inspection and testing efforts. The metrics we investigated fall into three categories: complexity, code churn, and developer activity metrics. We performed two empirical case studies on large, widely used open-source projects: the Mozilla Firefox web browser and the Red Hat Enterprise Linux kernel. The results indicate that 24 of the 28 metrics collected are discriminative of vulnerabilities for both projects. The models using all three types of metrics together predicted over 80 percent of the known vulnerable files with less than 25 percent false positives for both projects. Compared to a random selection of files for inspection and testing, these models would have reduced the number of files and the number of lines of code to inspect or test by over 71 and 28 percent, respectively, for both projects.

Discussion

This is an interesting work, analyzing factors which can be used as the indicator to predict which file(s) in a project may contain vulnerabilities. But there are still several questions:

- Can these conclusions/observations be applied to other projects/languages?

- Is there a universal threshold to predict if a source file contain a bug? Or these criteria are just project-wise.

- If single factors already do well for prediction, why do we (in practice) consider multiple factors?

Abstract

Fuzz testing has enjoyed great success at discovering security critical bugs in real software. Recently, researchers have devoted significant effort to devising new fuzzing techniques, strategies, and algorithms. Such new ideas are primarily evaluated experimentally so an important question is: What experimental setup is needed to produce trustworthy results? We surveyed the recent research literature and assessed the experimental evaluations carried out by 32 fuzzing papers. We found problems in every evaluation we considered. We then performed our own extensive experimental evaluation using an existing fuzzer. Our results showed that the general problems we found in existing experimental evaluations can indeed translate to actual wrong or misleading assessments. We conclude with some guidelines that we hope will help improve experimental evaluations of fuzz testing algorithms, making reported results more robust.

This is a very interesting paper. It points out some "unscientific" aspects in fuzzing, including:

- Not unified test suite and various fuzzing targets (also different versions)

- Important factors like execution time and seed selection

They suggest using more statistical methodology (e.g., statistical tests) to support one fuzzer beats another.

- Interestingly, there is a new SoK paper in 2024 discussing this similar topic: SoK: Prudent Evaluation Practices for Fuzzing.

Pros

- Extremely extensive evaluation

- Has (a little) explanation

- Evaluate off-the-shelf and self-trained LLMs

- Parameter Sweeping

Gadgets

We note that restricting our evaluation to short, localized patches does not necessarily harm the validity of our analysis, as prior work has found that security patches tend to be more localized, have fewer source code modifications, and tend to affect fewer functions, compared to non-security bugs >>>

USENIX'19 Paper

System

Attacks

- Monitoring nPT (nested page table) and gPT (guest page table)

- Page faults => a list of accessed pages => find the actual address of the target page

- Decryption oracle: move content of the ciphertext to the SWIOTLB

- Let it be decrypted

Technical Details

- Monitoring/MITM the DMA operations

- Pattern matching to find the accessed address in private memory

IOremapto replace ciphertext- QEMU notifies the VM about DMA write. Does it notify the device to read DMA?

Abstract

We present PIDGIN, a program analysis and understanding tool that enables the specification and enforcement of precise application-specific information security guarantees. PIDGIN also allows developers to interactively explore the information flows in their applications to develop policies and investigate counter-examples. PIDGIN combines program dependence graphs (PDGs), which precisely capture the information flows in a whole application, with a custom PDG query language. Queries express properties about the paths in the PDG; because paths in the PDG correspond to information flows in the application, queries can be used to specify global security policies. PIDGIN is scalable. Generating a PDG for a 330k line Java application takes 90 seconds, and checking a policy on that PDG takes under 14 seconds. The query language is expressive, supporting a large class of precise, application-specific security guarantees. Policies are separate from the code and do not interfere with testing or development, and can be used for security regression testing. We describe the design and implementation of PIDGIN and report on using it: (1) to explore information security guarantees in legacy programs; (2) to develop and modify security policies concurrently with application development; and (3) to develop policies based on known vulnerabilities.

How PDG looks like

Methodology

- Generate PDG for a Program

- Query the graph with PidginQL (according to the property to check)

- Check the returned subgraph (if it's empty in most cases)

Comments

This seems to be a lightweight method for validating the property of code. One thing worth notice is that the generation of PDG takes relatively long while, whereas querying it takes much less time (~5X). The properties to validate is dependent on the program, which means for different programs, the query could be different.

However, this method lacks formal foundation. The soundness and completeness are not covered in this paper, and I'm not sure about the reliability of such PDG-based methods.

Abstract

Industries and governments are increasingly compelled by regulations and public pressure to handle sensitive information responsibly. Regulatory requirements and user expectations may be complex and have subtle implications for the use of data. Information flow properties can express complex restrictions on data usage by specifying how sensitive data (and data derived from sensitive data) may flow throughout computation. Controlling these flows of information according to the appropriate specification can prevent both leakage of confidential information to adversaries and corruption of critical data by adversaries. There is a rich literature expressing information flow properties to describe the complex restrictions on data usage required by today’s digital society. This monograph summarizes how the expressiveness of information flow properties has evolved over the last four decades to handle different threat models, computational models, and conditions that determine whether flows are allowed. In addition to highlighting the significant advances of this area, we identify some remaining problems worthy of further investigation.

Considerations

Theoretical Foundations

- Lattice theory

- Noninterference

- Logic models (e.g., temporal logic)

Threat Models

- Termination: does termination leaks high*?

- Time: different time

- Interaction: input/output flow

- Program code

Computational Models

- Nondeterminism: the program is not deterministic

- Composition of systems: e.g., feedback: the output becomes next input. Composition can lead to nondeterminism

- Concurrency

Re(de)classification

- Need to consider: what, where, when, and who

- Introduce Delimited Release to declassify

S-FaaS: Trustworthy and Accountable Function-as-a-Service using Intel SGX

- Resource accounting on SGX “enclaved” FaaS.

- Trusted timer: built using TSX + additional timer thread

- Model: function trusted by user, but not service provider(platform) => sandbox

- KMS, transitive attestation, encryption

- Implementation on Apache OpenWhisk

Towards Demystifying Serverless Machine Learning Training

- Implement a serverless distributed ML framework, LambdaML, including distributed optimization, communication (with a storage server) and synchronization

- Compare the FaaS and IaaS solution for distributed ML.

Trust more, serverless

- JS FaaS in SGX enclaves

- Google V8 engine/Duntape + SGX LKL + Apache OpenWhisk

- Key management

- Parallel, warm start, adjust to load

Clemmys: Towards Secure Remote Execution in FaaS

- SGX2: DMM to speedup enclave init

- OpenWhisk + Scone + Palaemon(KMS)

- Gateway(T) + Controller(U) + Worker(T): not all in the enclave

- Features: function chaining & verification

- Functions should be manually inspected

Other related papers

Confidential Serverless Made Efficient with Plug-In Enclaves

Facts

Compiler optimization passes can introduce new vulnerabilities into correctly written code. For example, an optimization pass may introduce branching instructions in originally branchless C code [46].

Idea

Constant-time in(similar to) C is not very easy:

- Compiler may optimize the CT instructions away with branches

- CT code is not easy to write in C (and hard to read)

- The generated CT code is not very efficient (e.g., not use CT-related instructions like

cmov,adc)

FaCT also has a IFC label on data to determine is security level.

Applying cluster on code to find inconsistency.

Key insight: Our approach is inspired by the observation that many bugs in software manifest as inconsistencies deviating from their non-buggy counterparts, namely the code snippets that implement the similar logic in the same codebase. Such bugs, regardless of their types, can be detected by identifying functionally-similar yet inconsistent code snippets in the same codebase

Pros

- Two-level clustering

- Embedding on program dependency graph

- Found bugs (at function level) in large projects

- Embedding on code structures

- Generality

Cons

- Need repo-specific training

- Literals are removed at the IR level

To abstract Constructs, we preserve only the variable types for each program statement and remove all variable names and versions.

- Needs repository-specific configuration of thresholds

- Still very high FP

Others

- Granularity at the function level

If an inconsistent cluster contains more than a fixed number (e.g., 2) of deviating nodes (i.e., nodes in Table 2), the inconsistency is deprioritized because it is unlikely to be a true inconsistency (i.e., a single inconsistency rarely involves many deviations).

# Rebase

## Change last commit

git commit --amend --date "$(date)"

# MISC

## Add tag

git tag -a <tag> <commit>

git push --tags

## Delete file in commit (but not in FS)

git rm <file_name> --cached

Partial GPU

Passing a partial GPU to a VM using vGPU and SRIOV.

This shared case can be dangerous.

Isolation inside the CVM

How exactly does the GPU access the CVM's memory? Part of the memory is marked as shared, while encrypted.

The protected file system is first initialized by function pal_linux_main (in db_main.c).

PF initialization

The init function is init_protected_files in enclave_pf.c.

int init_protected_files(void) {

int ret;

pf_debug_f debug_callback = NULL;

#ifdef DEBUG

debug_callback = cb_debug;

#endif

pf_set_callbacks(cb_read, cb_write, cb_truncate, cb_aes_gcm_encrypt, cb_aes_gcm_decrypt,

cb_random, debug_callback);

/* if wrap key is not hard-coded in the manifest, assume that it was received from parent or

* it will be provisioned after local/remote attestation; otherwise read it from manifest */

char* protected_files_key_str = NULL;

ret = toml_string_in(g_pal_state.manifest_root, "sgx.protected_files_key",

&protected_files_key_str);

if (ret < 0) {

log_error("Cannot parse \'sgx.protected_files_key\' "

"(the value must be put in double quotes!)\n");

return -PAL_ERROR_INVAL;

}

if (protected_files_key_str) {

if (strlen(protected_files_key_str) != PF_KEY_SIZE * 2) {

log_error("Malformed \'sgx.protected_files_key\' value in the manifest\n");